Configuring models

Language Model Configuration

Aide comes pre-packaged with a few language model defaults that are always best-in-class, and requires zero configuration at your end to get started. However, if you do wish to use a language model of your choice, or even choose a provider you prefer, you can configure this in Aide.

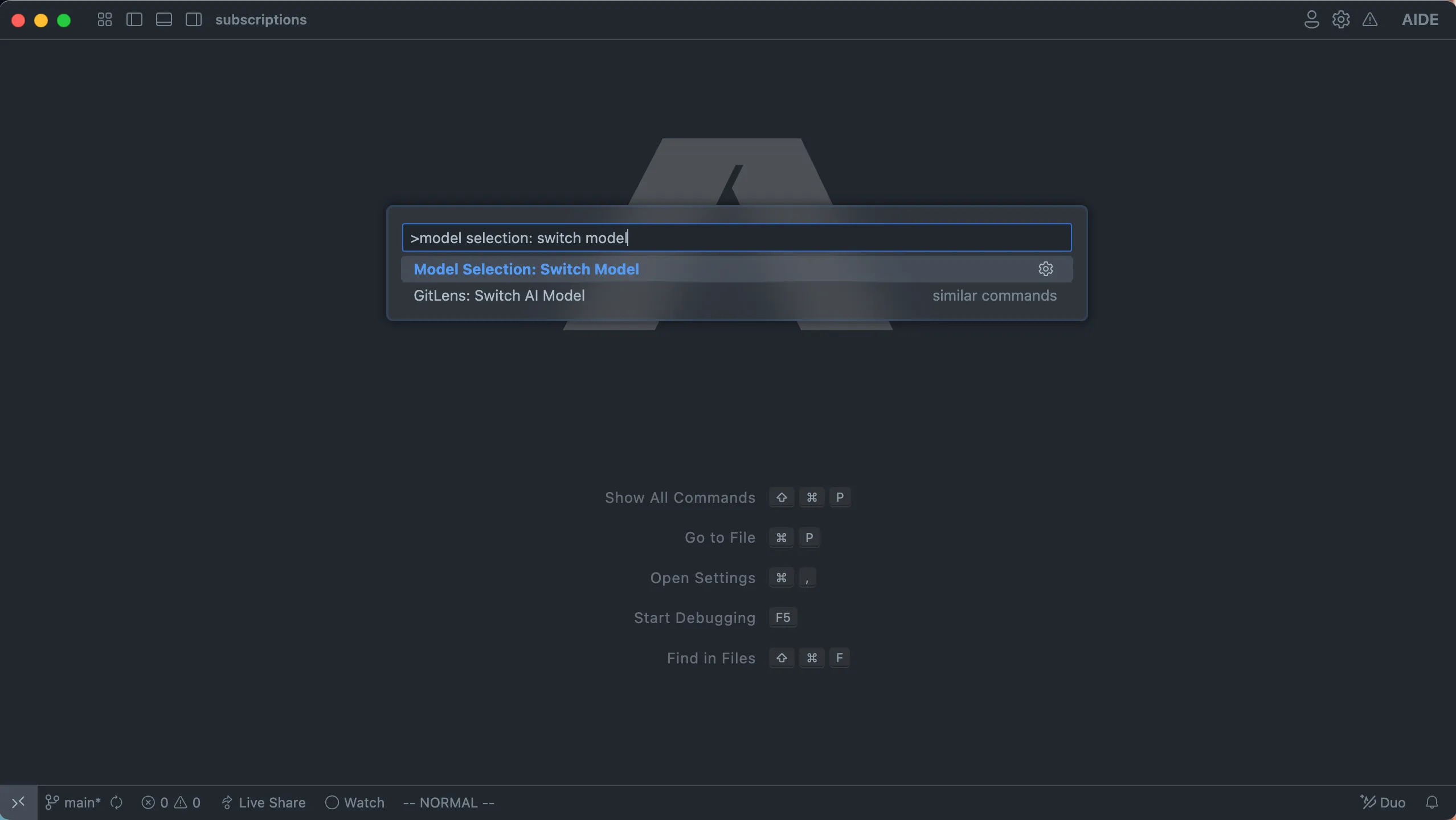

Quick Model Switching

You can quickly switch between models using:

- Keyboard shortcut:

⌘/Ctrl + K + M - Command palette: Search for “Model Selection: Switch Model”

Recommended Models

For hosted solutions:

- Claude Sonnet 3.5 (by Anthropic) is currently the best performing model.

- For self-hosted or OSS models, we recommend Qwen models, which have shown excellent performance across various editor scenarios in our testing.

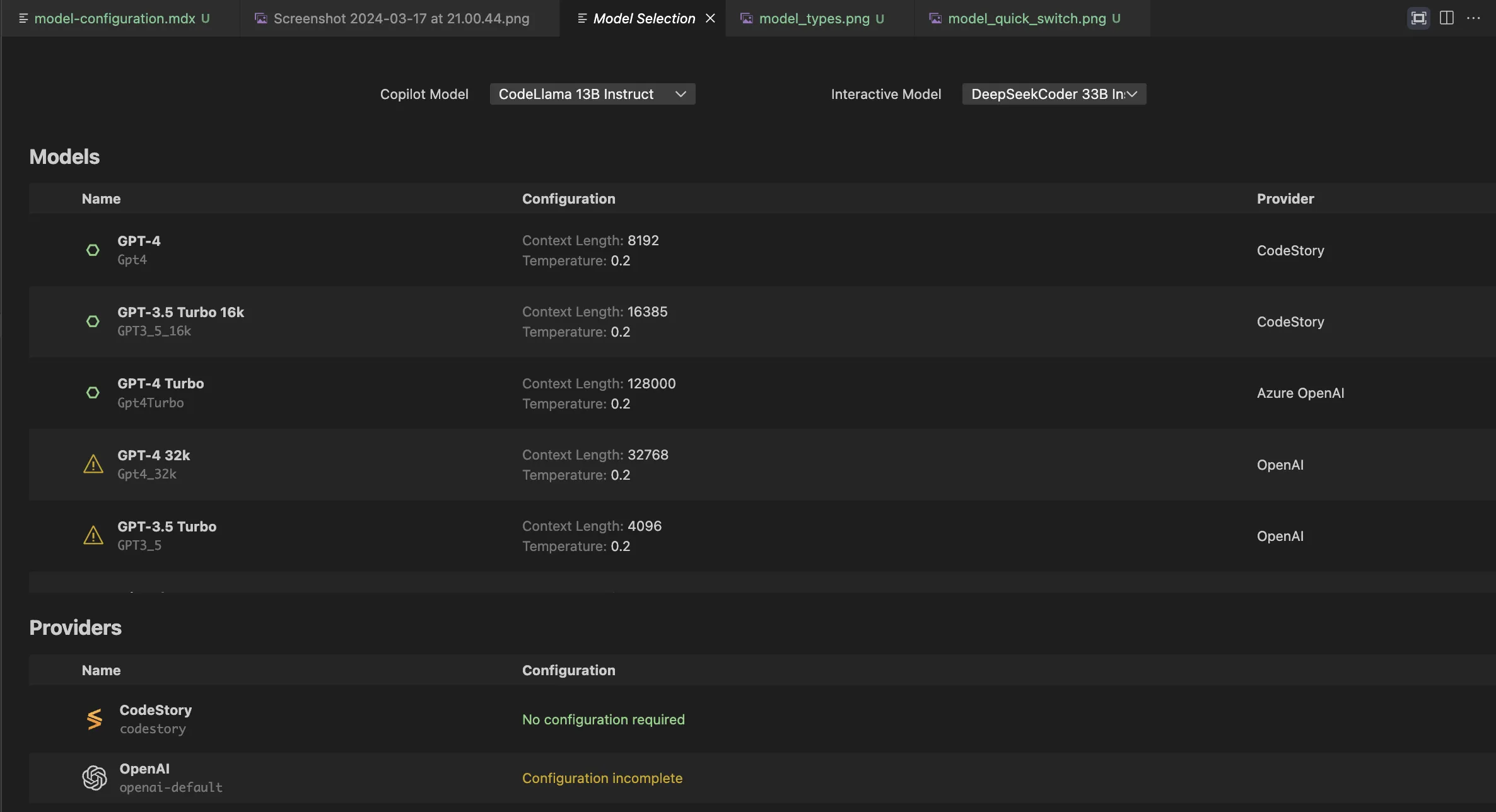

Provider Setup

Access provider settings by pressing ⌘/Ctrl + Shift + ,. You’ll see a configuration page like this:

Below are the supported providers and their setup instructions:

Anthropic

- Get your API key from Anthropic’s documentation

- Enter the API key in the Anthropic provider section

OpenAI

- Obtain your API key following OpenAI’s guide

- Optionally set a custom API base URL for private deployments

Azure OpenAI

Required configuration:

- Deployment ID

- API URL

- API Key

TogetherAI

- Create an account and get started using their quickstart guide

- New users receive $25 in free credits to experiment with different models

Ollama

No configuration needed - just ensure Ollama is running in the background.

LMStudio

Configure the local URL if using a custom port for the LMStudio API server.

FireworksAI

- Generate your API key following their quickstart guide

- Known for fast inference speeds - recommended for copilot-style models

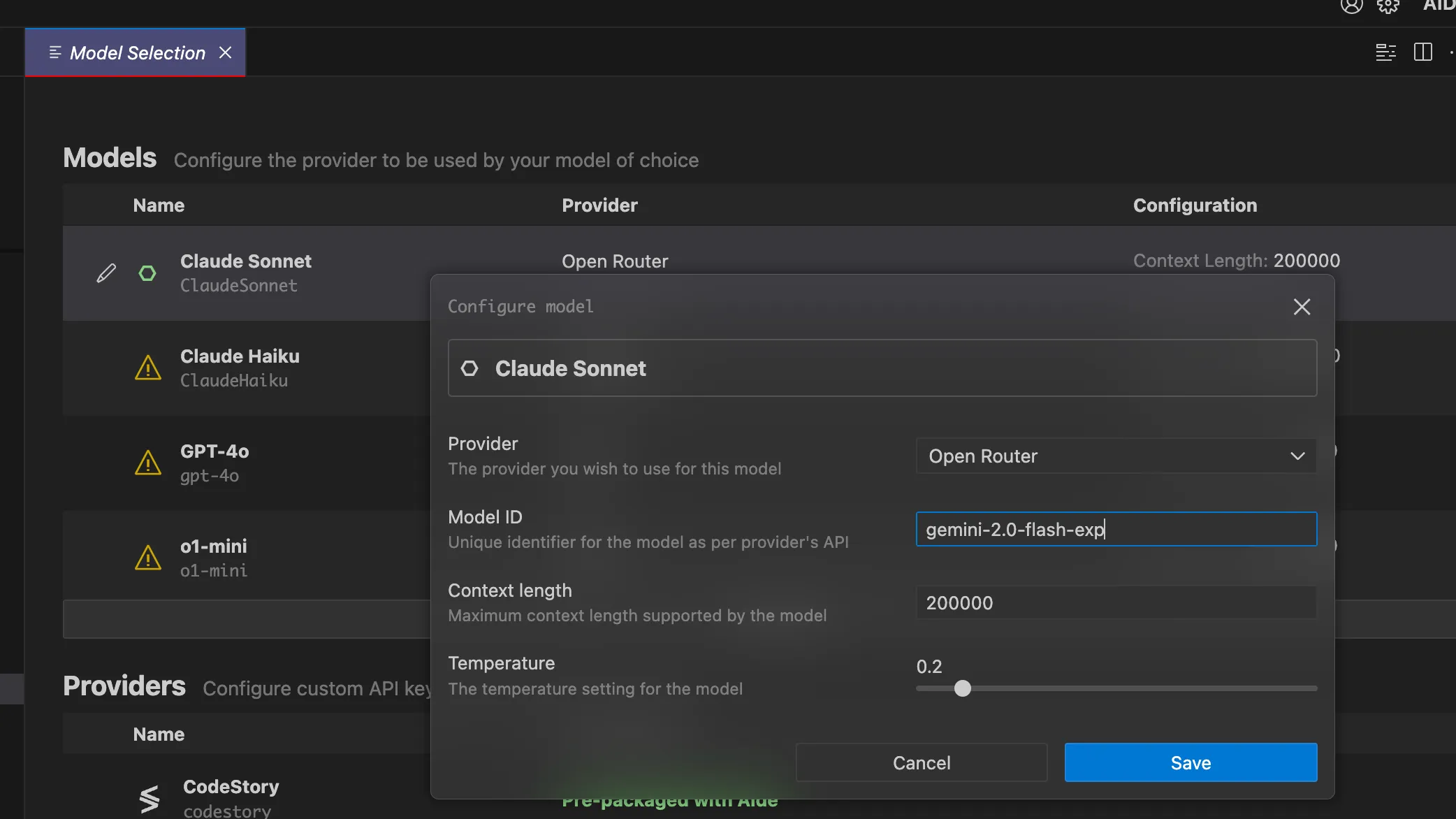

Custom Model Configuration

For services like OpenRouter, you can customize model IDs:

- Select any model from the model selection page

- Rename it and update the model ID as needed

Example: Configuring Gemini-2.0-flash model:

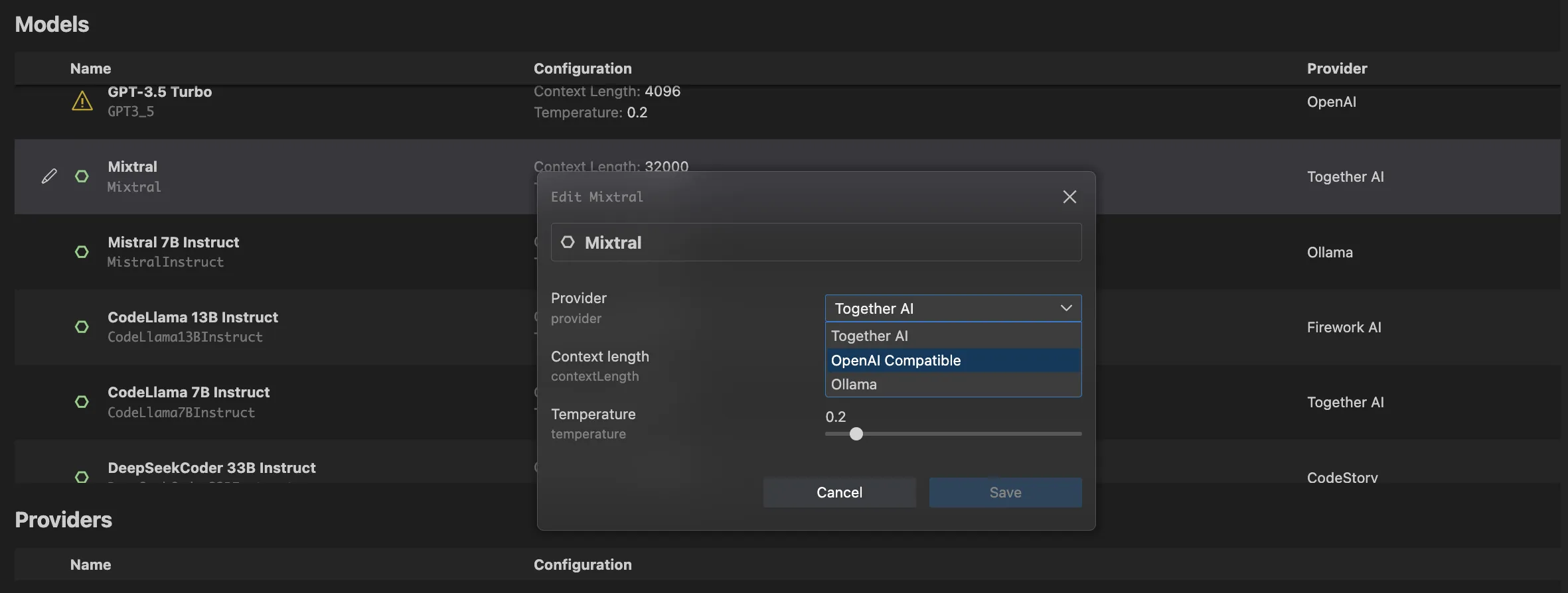

Switching Providers for Models

You can run the same model through different providers:

- Click the edit button next to your model

- Choose your configured provider from the dropdown

Need help with a provider not listed here? Join our Discord community - we’re happy to add support for your custom infrastructure and help with setup.